Research Findings: AI Shows Promise for Emergency Medicine Handoff Notes, With Safety Caveats

In a study conducted at a major New York hospital, Schacher and colleagues explored the potential of large language models (LLMs) to generate emergency medicine (EM) handoff notes. The study, highlighted by JAMA Network Open, analyzed over 1,600 EM patient encounters leading to hospital admissions.

The study aimed to determine if integrating artificial intelligence could reduce the documentation load on physicians without jeopardizing patient outcomes. Two LLMs, RoBERTa and Llama-2, were employed for different aspects of the task, including identifying relevant content and crafting summaries.

Across these patient cases, the average patient age was 59.8 years, and women comprised 52% of the sample. Clinicians reviewed a sample of generated notes for completeness, clarity, and safety as part of the study's analysis.

The findings suggest the potential of LLMs to assist with medical documentation. Automated evaluations revealed that LLM-generated summaries often surpassed those written by physicians in terms of detail and alignment with source notes, as indicated by ROUGE-2 scores. However, while the LLM-generated summaries were generally acceptable, they fell short of physician-written notes in readability and contextual relevance.
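For readers unfamiliar with ROUGE-2, the metric measures how many two-word sequences (bigrams) a generated summary shares with a reference text. The following is a minimal sketch of that idea, not the study's evaluation code: the whitespace tokenizer, the function names, and the example sentences are all assumptions made for illustration, and production ROUGE implementations typically add stemming and more careful tokenization.

```python
from collections import Counter


def bigrams(text):
    """Count adjacent word pairs using a simple lowercase whitespace split (an assumption)."""
    tokens = text.lower().split()
    return Counter(zip(tokens, tokens[1:]))


def rouge_2(candidate, reference):
    """Return bigram-overlap precision, recall, and F1 between two texts."""
    cand, ref = bigrams(candidate), bigrams(reference)
    # Counter intersection keeps the minimum count of each shared bigram.
    overlap = sum((cand & ref).values())
    precision = overlap / sum(cand.values()) if cand else 0.0
    recall = overlap / sum(ref.values()) if ref else 0.0
    total = precision + recall
    f1 = 2 * precision * recall / total if total else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}


# Hypothetical example texts, not taken from the study.
reference = "patient admitted with chest pain and shortness of breath"
candidate = "patient admitted with chest pain"
scores = rouge_2(candidate, reference)
print(scores)
```

In this toy example every bigram in the candidate also appears in the reference, so precision is 1.0, while the candidate covers only half of the reference's bigrams, so recall is 0.5. A high score therefore reflects textual overlap with the source, which is not the same as the readability or contextual relevance that clinicians judged.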

Potential safety risks, including incomplete information and flawed logic, were identified in 8-9% of cases. None of the identified risks were deemed life-threatening. The SCALE approach revealed fewer issues in AI-generated notes compared to physician-written notes.

By automating routine aspects of notetaking, these systems could allow physicians to focus more on direct patient care. The team emphasized the need for ongoing refinement so that generated notes continue to meet the completeness, clarity, and safety criteria used in the clinician review.

Interrater reliability assessments among clinicians indicated agreement on criteria such as note correctness and usefulness, but readability ratings were less consistent. By excluding race-based information during model fine-tuning, the researchers hoped to minimize biases in the generated outputs.
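Interrater reliability of the kind described above is often summarized with a chance-corrected agreement statistic. The article does not say which statistic the researchers used, so the sketch below assumes Cohen's kappa for two raters purely as an illustration; the function name and the ratings are hypothetical.

```python
from collections import Counter


def cohen_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters labeling the same items (assumed statistic)."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must label the same non-empty set of items")
    n = len(rater_a)
    # Fraction of items on which the two raters gave the same label.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    # Agreement expected by chance, from each rater's label frequencies.
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used a single identical label throughout
    return (observed - expected) / (1 - expected)


# Hypothetical readability ratings for four notes.
clinician_1 = ["readable", "readable", "unclear", "unclear"]
clinician_2 = ["readable", "unclear", "unclear", "unclear"]
kappa = cohen_kappa(clinician_1, clinician_2)
print(kappa)
```

Here the raters agree on three of four notes (75%), but half of that agreement would be expected by chance given how often each rater used each label, so kappa comes out at 0.5. Lower values on a criterion such as readability would correspond to the less consistent ratings the article describes.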

The study also examined cases of hallucinated content, a well-documented limitation of AI systems. Hallucinated content, while rare, could potentially lead to misinformation in the handoff notes, underscoring the need for continued refinement and monitoring.

In conclusion, while the study demonstrates the potential of LLMs in generating EM handoff notes, it also highlights the need for ongoing refinement to ensure accuracy, safety, and readability. The findings open up exciting possibilities for the future of AI in medicine, particularly in streamlining documentation processes and allowing healthcare professionals to focus more on direct patient care.

Read also:

- Nightly sweat episodes linked to GERD: Crucial insights explained

- Antitussives: List of Examples, Functions, Adverse Reactions, and Additional Details

- Asthma Diagnosis: Exploring FeNO Tests and Related Treatments

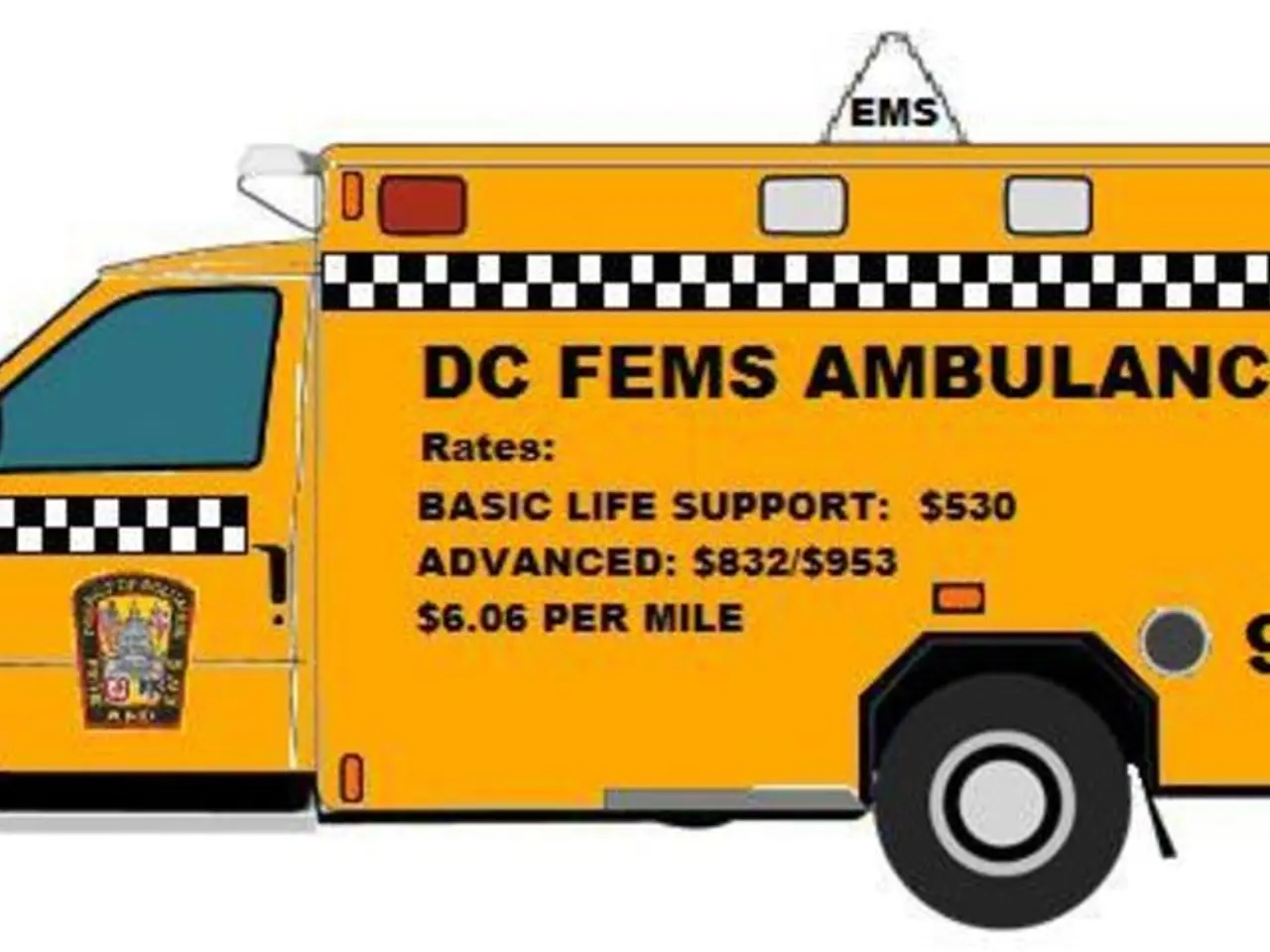

- Unfortunate Financial Disarray for a Family from California After an Expensive Emergency Room Visit with Their Burned Infant